- 13 Storage Engines

- 13.1 Comparing Transaction and Nontransaction Engines

- 13.2 Other Storage Engines

- 13.3 Setting the Storage Engine

- 13.4 Overview of MySQL Storage Engine Architecture

- 13.5 The MyISAM Storage Engine

- 13.6 The InnoDB Storage Engine

- 13.7 The IBMDB2I Storage Engine

- 13.8 The MERGE Storage Engine

- 13.9 The MEMORY (HEAP) Storage Engine

- 13.10 The EXAMPLE Storage Engine

- 13.11 The FEDERATED Storage Engine

- 13.12 The ARCHIVE Storage Engine

- 13.13 The CSV Storage Engine

- 13.14 The BLACKHOLE Storage Engine

The BLACKHOLE storage engine acts as a

“black hole” that accepts data but throws it away and

does not store it. Retrievals always return an empty result:

mysql>CREATE TABLE test(i INT, c CHAR(10)) ENGINE = BLACKHOLE;Query OK, 0 rows affected (0.03 sec) mysql>INSERT INTO test VALUES(1,'record one'),(2,'record two');Query OK, 2 rows affected (0.00 sec) Records: 2 Duplicates: 0 Warnings: 0 mysql>SELECT * FROM test;Empty set (0.00 sec)

To enable the BLACKHOLE storage engine if you

build MySQL from source, invoke configure with

the --with-blackhole-storage-engine option.

To examine the source for the BLACKHOLE engine,

look in the sql directory of a MySQL source

distribution.

When you create a BLACKHOLE table, the server

creates a table format file in the database directory. The file

begins with the table name and has an .frm

extension. There are no other files associated with the table.

The BLACKHOLE storage engine supports all kinds

of indexes. That is, you can include index declarations in the table

definition.

You can check whether the BLACKHOLE storage

engine is available with the SHOW

ENGINES statement.

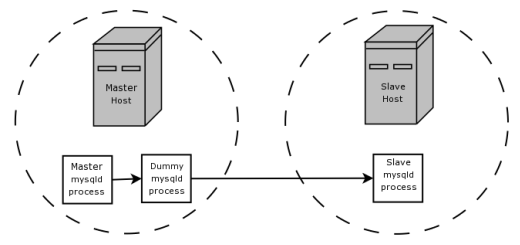

Inserts into a BLACKHOLE table do not store any

data, but if the binary log is enabled, the SQL statements are

logged (and replicated to slave servers). This can be useful as a

repeater or filter mechanism. Suppose that your application requires

slave-side filtering rules, but transferring all binary log data to

the slave first results in too much traffic. In such a case, it is

possible to set up on the master host a “dummy” slave

process whose default storage engine is

BLACKHOLE, depicted as follows:

The master writes to its binary log. The “dummy”

mysqld process acts as a slave, applying the

desired combination of replicate-do-* and

replicate-ignore-* rules, and writes a new,

filtered binary log of its own. (See

Section 16.1.3, “Replication and Binary Logging Options and Variables”.) This filtered log is

provided to the slave.

The dummy process does not actually store any data, so there is little processing overhead incurred by running the additional mysqld process on the replication master host. This type of setup can be repeated with additional replication slaves.

INSERT triggers for

BLACKHOLE tables work as expected. However,

because the BLACKHOLE table does not actually

store any data, UPDATE and

DELETE triggers are not activated:

The FOR EACH ROW clause in the trigger definition

does not apply because there are no rows.

Other possible uses for the BLACKHOLE storage

engine include:

Verification of dump file syntax.

Measurement of the overhead from binary logging, by comparing performance using

BLACKHOLEwith and without binary logging enabled.BLACKHOLEis essentially a “no-op” storage engine, so it could be used for finding performance bottlenecks not related to the storage engine itself.

The BLACKHOLE engine is transaction-aware, in the

sense that committed transactions are written to the binary log and

rolled-back transactions are not.

Column Filtering

When using row-based replication,

(binlog_format=ROW), a slave where the last

columns are missing from a table is supported, as described in the

section Section 16.4.1.5, “Replication with Differing Table Definitions on Master and Slave”.

This filtering works on the slave side, that is, the columns are copied to the slave before they are filtered out. There are at least two cases where it is not desirable to copy the columns to the slave:

If the data is confidential, so the slave server should not have access to it.

If the master has many slaves, filtering before sending to the slaves may reduce network traffic.

Master column filtering can be achieved using the

BLACKHOLE engine. This is carried out in a way

similar to how master table filtering is achieved - by using the

BLACKHOLE engine and the option

--replicate-[do|ignore]-table.

The setup for the master is:

CREATE TABLE t1 (public_col_1, ..., public_col_N,

secret_col_1, ..., secret_col_M) ENGINE=MyISAM;

The setup for the trusted slave is:

CREATE TABLE t1 (public_col_1, ..., public_col_N) ENGINE=BLACKHOLE;

The setup for the untrusted slave is:

CREATE TABLE t1 (public_col_1, ..., public_col_N) ENGINE=MyISAM;

User Comments

I got a little confused by the part that says that UPDATE and DELETE triggers don't execute on BLACKHOLE tables. If you are using a BLACKHOLE replica to relay binlogs to other "real" replicas, this does not mean that changes from UPDATE and DELETE triggers won't be applied on the real replicas, just that the triggers won't actually fire on the BLACKHOLE replica. At least that's my understanding.

Add your own comment.